Papers

[-] Gupta, Arshita, Tien Bau, Joonsoo Kim, Zhe Zhu, Sumit Jha, and Hrishikesh Garud. "Torque Based Structured Pruning for Deep Neural Network." In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 2711-2720. 2024.

Short description: One of the most challenging aspects of working with current AI models is their enormous size. Although these models perform extraordinarily well on benchmark tasks, their size often makes them impractical to deploy on hardware for real-world applications. We therefore developed a novel channel pruning technique that reduces both the number of parameters and the FLOPs (floating-point operations) while nearly maintaining the original model's accuracy, achieving results competitive with other state-of-the-art structured pruning methods. We also showed that our method is easy to implement and can be integrated with common computer vision architectures, easing the hardware requirements of large models.

[-] Gupta, Arshita. "Attentive Query Network." (2019).

Short description: Scene understanding is an important problem in modern high-level computer vision. Much current effort goes into developing algorithms for the 3D reconstruction of an entire scene. Many existing solutions require human annotation, such as masking, which makes these algorithms dependent on humans. Further, once this masking is applied, they focus on only one object in the scene. We aim not only to create a 3D representation of the object but also to generate the entire scene from any user-defined viewpoint, including viewpoints different from those previously observed. This work explores the novel idea of scene rendering using query networks [6] and extends it with a spatial attention mechanism. Unlike other techniques, this method relies entirely on the model learning on its own and removes any need for human involvement.

[-] Gupta, Arshita, Hetu Shah, Prashita Prathapan, Aniket Saha, and Tushar Chonde. "Electronic Braille Reader and Tutor." IJESC (2016).

Short description: In an increasingly digital world, communication on paper is rapidly being replaced by messengers, SMS services, e-book readers and the like. This particularly affects the lives of the 39 million blind people worldwide, who must either rely on audiobooks or depend on a sighted person, which severely limits their ability to read. A growing number of people also lose their sight in accidents, and conventional methods of teaching Braille are much less effective for this group of visually challenged people. The average such learner may never become accustomed to pages of Braille text, creating a need, and hence a potential market, for a single device that does the job of pages of Braille characters. This paper therefore presents ERT-Braille (Electronic Reader and Tutor - Braille), a device made to aid these differently abled persons. The two major objectives of ERT-Braille are to give blind users access to digital content (MODE 1) and to serve as a self-tutor for beginners learning Braille (MODE 2). The device uses a Braille cell to display characters from a Portable Document Format (PDF) file as raised symbols on the cell. Its self-tutor mode helps users learn Braille through speech recognition based on Mel-frequency cepstral coefficients (MFCCs).

Awards

[1] Samsung Research America President’s Award, 2021.

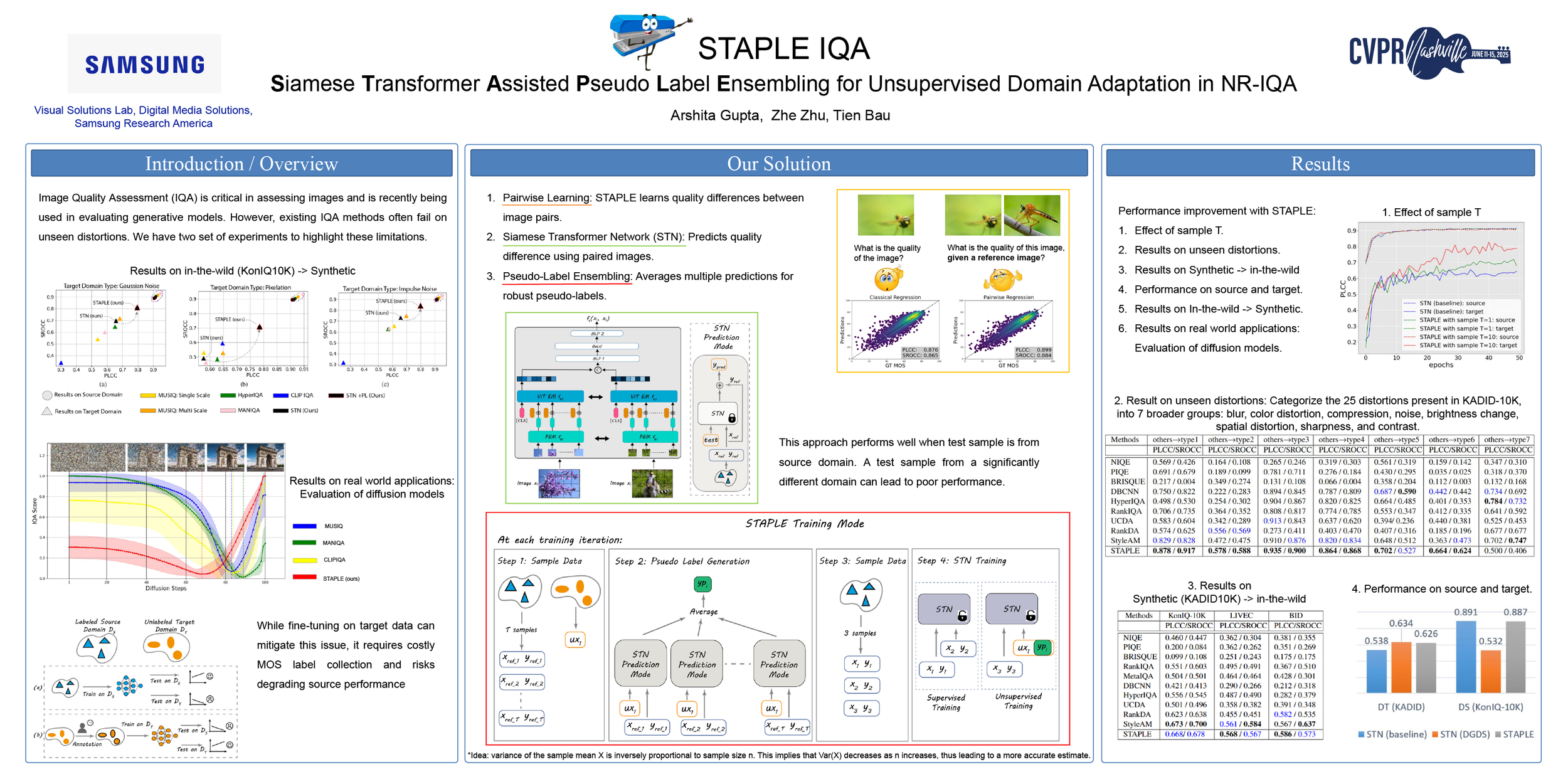

[-] Gupta, Arshita, Zhe Zhu, and Tien Bau (Samsung Research America). "STAPLE: Siamese Transformer Assisted Pseudo Label Ensembling for Unsupervised Domain Adaptation in No-Reference IQA."